The Vital Need for an Ethical Center in the Innovation Economy

Recently, an internal memo surfaced at Google, causing a considerable amount of controversy. The memo revealed details of Dragonfly, a codename for a project that would allow Google to censor search results in China and share user locations with the government. Google acted immediately. In other words, they scrambled and did just about anything they could to cover their ass. Google execs dispatched an army of HR managers to find out who had accessed the memo and rein in its spread. Instead of confronting the disturbing reality of what they were doing, the most powerful company in the world focused on sweeping the problem under the rug.

The approach deployed by Google is what we have come to expect from Silicon Valley. The sycophantic tech media just prints what they are told (looking your way, TechCrunch) and does little in the way of investigative reporting—not to mention holding the tech community accountable. These factors combine to pave the way for the widely accepted philosophy of “Innovation at any cost” disguised as technological solutionism—the modus operandi of the technology industry. The consequences are not always as grave, because few companies wield power comparable to Google’s. But the utter lack of ethics in product decisions is visible across the board: from fledgling startups to behemoths, asking about the human consequences is just not part of the process.

Ethics: Dust in the (Disrupted) Wind?

Have you ever stopped to consider the word ‘disruption’? It means to break apart or to throw into disorder. It’s a pejorative term, fetishized by tech. Entrepreneurs and venture capitalists founded the entire post-dotcom bubble industry on the pursuit of disruption, and they succeeded. Social media platforms have disrupted democracy. Uber has disrupted transportation. Airbnb has disrupted rental markets. Amazon has disrupted retail, at the expense of many employees who struggle to make ends meet. There’s no question that tech companies create powerful, useful products, but disruption has no ethics. And, as Albert Camus observed, “a man without ethics is a wild beast loosed upon this world.” The ‘beasts’ of Silicon Valley exploit people (customers, workers, society) to innovate. But the reality remains: there’s a point when further innovation without ethics will become societally unsustainable, and we’re skating on thin, thin ice. So when does this come to a stop?

Careful, Your Glitches Are Showing

Om Malik, a long-time chronicler of the tech industry, caught the essence in his remorseful New Yorker essay:

“Silicon Valley’s biggest failing is not poor marketing of its products, or follow-through on promises, but, rather, the distinct lack of empathy for those whose lives are disturbed by its technological wizardry.”

People who build products don’t care about the consequences. No one asks them to. They work in an environment where the dominant philosophies are “move fast and break things,” and “growth solves all the problems.”

In his book Hooked—which pretty much serves as a guide to making addictive products—Nir Eyal advocates “hooking” people on products by exploiting their feelings of fear, loneliness, and boredom. Have his methods been shunned as unethical? Of course not. Hooked is one of the most often recommended books for product managers. Why help users, when we can manipulate and exploit them?

Beware the Algorithm

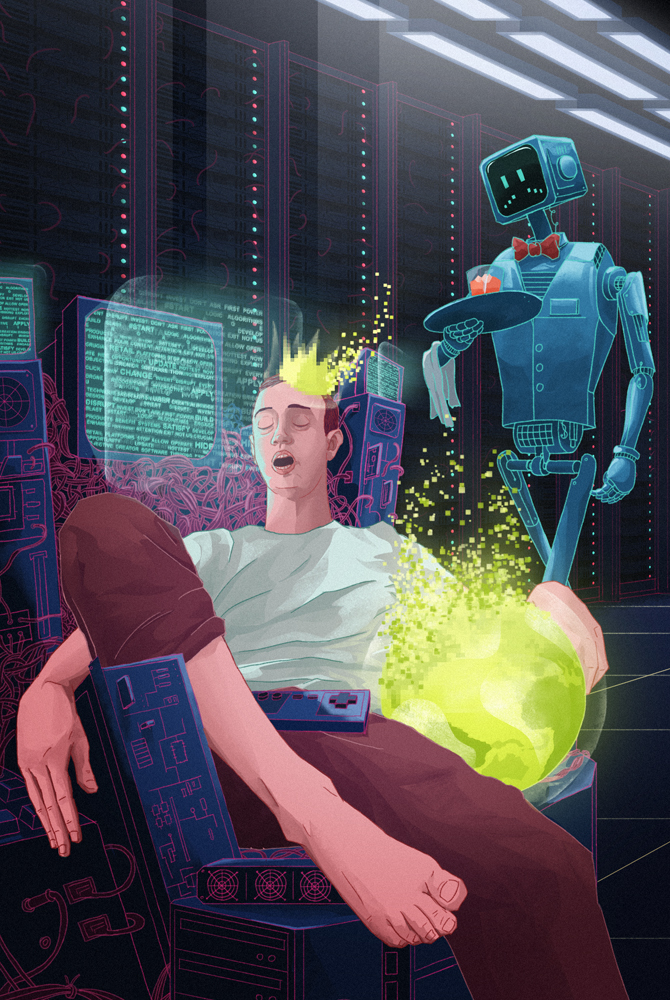

To improve as a discipline, product development must incorporate ethics as an element equally important to research, design, and go-to-market strategy. This is especially crucial now, as software continues to become even more powerful due to huge advancements in artificial intelligence and machine learning.

We’ve already seen what damage algorithmic products can do when ethics are not in the scope of a project. The Brexit referendum and the American presidential election of 2016 showed how Facebook’s news feed algorithms can be gamed by companies like Cambridge Analytica and trolls spreading fake news. Years ago, Flickr was blasted in the news for its auto-tagging feature. The algorithm tagged a photo of a concentration camp as a jungle gym, and a person with a dark complexion as an ape.

A recent analysis by Media Matters exposes how YouTube’s recommendation algorithms push viewers towards more and more radical content, pouring gasoline on the flames of populism and conspiracy theories. AI-driven algorithms like these are good for business for two reasons. First, they optimize engagement. Engagement means attention, and attention means money. Second, algorithms give companies plausible deniability. We’ve seen that before, like when Mark Zuckerberg employed the “it’s the algorithms, not us” defense in the wake of the US presidential election results.

When tech companies land in hot water, they often blame the machine. But it’s a specious and misleading argument. Kickstarter’s former data chief Fred Benenson refers to this phenomenon as “mathwashing”: the tendency to blindly venerate algorithms, treating them as entirely objective. Pro-tip: they aren’t. They all have the biases of their creators baked in. Stanford philosophy professor Ken Taylor explains it well:

“Although some programmers may think of themselves as young Mr. Spocks—all logic and no emotion—in the end they are just humans too. And they are unfortunately just as prone to bias and blinders as the rest of us. Nor can we easily train them to simply avoid writing their own biases into their algorithms. Most humans aren’t even aware of their biases.”

These algorithms are the hottest class of products in Silicon Valley right now and they are transforming retail, healthcare, financial services and even the military. Countless product teams are currently tinkering with AI solutions, from customer service to facial recognition—and we desperately need them to be responsible, ethical, and empathetic.

Ethics: A Responsibility, Not Just a Right

Do you know why American law schools are required to teach ethics? Because of Watergate. The scandal that toppled Nixon’s presidency implicated 21 lawyers, including two attorneys general and Richard Nixon himself. In the aftermath, it was decided that people with this much power have to study ethics to understand the consequences of their actions.

These days, innovators and technologists have also grown to wield enormous power. There’s a computer on every desk, in every pocket, and soon enough on every wrist; not to mention the ones in cars, appliances, and the drones we see more and more flying over our heads. There are platforms that let us put software products a click away from literally billions of people. We should stop thinking of this as an opportunity to exploit them while hiding the truth behind the tiny text in mammoth user agreements.

Innovation can exist without exploitation. But to make it so, we need to change our thinking and design principles. We need to come to grips with how our innovations impact the world around us. So how do we do that?

Humans First

There are thousands of books, talks, and presentations about product management—but few of them talk about ethics. A good starting point is human-centered design, a philosophy and method of problem-solving that is just as it sounds: centered around the needs, requirements, and desires of users. When products and systems are designed following this approach, they are accessible, sustainable, efficient, and effective; and their users are satisfied and even report an improved sense of well-being. If you’re interested in learning more, IDEO offers a good primer on the topic.

Unfortunately, even tools like these, focused on understanding and empathizing with the people we’re building the product for, are easily warped. It’s not like Facebook, Google, or Uber aren’t aware of these techniques. They know them well and use them—for their own benefit: they employ empathy as a bridge to manipulation.

Is Your Product Ethical?

We’ve come up with six questions to help you figure out if you’re developing products ethically.

- Is the product addictive?

It’s okay to make products that are fun and engaging. In many categories, like games or social apps, it’s required. But engagement does not equal exploiting the way our brains work. That’s what slot machines do. For example, according to multiple users sharing their experiences, Tinder sends fake notifications saying you’ve been liked by someone to lure you back into swiping.

Engagement is good. Bait-and-switch is vile. It’s just that simple.

- Does it create a false sense of urgency?

Technology creates an unbearable amount of noise, from beeps and boops, to notifications firing every few minutes, and dozens and dozens of emails we receive daily. It’s been proven that these alerts and this level of connectivity cause undue stress and fatigue and the context switching literally makes us dumber. Apple’s insistence on using red in notification badges is an example of false urgency. An alarming visual cue should not be used unless there is cause for alarm.

- Can it induce anxiety?

Almost no one willingly builds products that make people feel bad. But in the pursuit of growth and engagement, it’s easy to forget about users’ well-being. Some of it ties to the previous two points (addiction and stress both lead to anxiety), but it’s about so much more than that. If you’re creating content, did you take precautions to make sure you don’t offend? If you’re building a community, do you have clear policies, and are you enforcing them? Twitter’s slowness in that regard made it a haven for trolls, racists, misogynists and abusers, something the company claims to be trying to fix, with negligible results after many years and multiple attempts.

Things are seldom black and white. You want to build good, useful products, but they won’t be that for everyone. The sooner you realize the potential negative consequences of what you’re making, the better situated you’ll be to prevent them. The Stoics referred to this as premeditatio malorum (literally: a premeditation of evils); nowadays, project managers and psychologists know it as a ‘premortem.’ No matter what you call it, and as ironic as it may sound, envisioning and playing out the ‘worst case scenarios’ ahead of time actually puts you in a favorable position to get ahead of them, should any of them arise.

- Is it inclusive and accessible?

This is especially important in the field of AI, but the point stands across all of product management: people of different ages, genders, and states of mental and physical health will use your product. Don’t assume your experience is relevant to them.

- Do your users know and understand how you handle their data?

Users shouldn’t have to dive into a wall of legalese in search of details about how your service handles their personal information. This is a phenomenon captured in its absurd glory by Dima Yarovinsky in his art installation “I Agree,” which demonstrates the lengthy and at this point infamous “Terms of Service” used by leading social media companies. Services also shouldn’t force their users to navigate a maze of options just to change their privacy settings.

What type of data you collect, who you are sharing it with, and why should all be crystal clear up front. Features reliant on data collection should be opt-in, rather than opt-out. And don’t be a creepy scumbag like Facebook with their habit of reading through your WhatsApp and Instagram contact lists and suggesting friends based on that.

You wouldn’t want your information to be collected and sold without your knowledge and consent. Don’t do that to your users.

- Is it fair?

There’s nothing wrong with drinking your competition’s milkshake if your product is better. But there’s everything wrong with approaching product development with an overarching sense of pure exploitation. Uber’s platform mediates an unfair relationship between riders (those who are enjoying an easily accessible and cheap service) and drivers (who are not offered any work protections and are dependent on the ranking system). Airbnb pushed many cities towards a short-term rental frenzy, making them considerably less liveable for long-term residents and citizens. Fiverr triggered a race to the bottom among freelancers.

You should be building a valuable service, not a zero-sum game.

Office Perks: Starting the Conversation

This is by no means a comprehensive list of considerations, but it starts the conversation. We barely touched on the corrupt business practices, exploitation of workers, #MeToo, or the sickening culture of elitism, misogyny and racism of the Valley. But these too are a sickness that must be cured in the boardrooms, laboratories and sales desks of our new economy leaders.

The tech field is experiencing a Nixonian moment. It’s time for a full-throated discussion, and it’s time for aggressive action. A great place to start is ensuring that our innovation factories, the likes of Stanford, Harvard, MIT, UCLA, Oxford, and elsewhere, begin teaching ethics to future developers, designers, and entrepreneurial captains of industry. It’s time for all those who oversee or work directly in product design, from engineers to the CEOs of professional organizations, to push for more ethical practices and standards in a robust and meaningful way. And it’s imperative to see similar educational efforts and forums from major accelerators such as Techstars and Y Combinator, as well as companies like WeWork, which have a direct interface with tomorrow’s innovation leaders. If free office snacks and yoga classes are viewed as vital, what about discussions on ethical behavior, business practices, management and product design?

The tech industry once inspired optimism, but it lost people’s trust—and for good reason. Apple, Google, Facebook, and other similar companies treat all of us as resources to exploit and mine either for attention or data (and in most cases, both). Uninformed political and civic leaders and fawning tech media abdicated their roles as protectors of social good. Founders and CEOs are laying society to waste for higher share prices the same way strip mining companies once left the landscape barren and polluted.

It’s essential that the innovators, technologists, and disruptors among us try to comprehend the impact of the changes we have unleashed or will unleash on our world. We must empathize with those who now feel lost, hopeless, and angry, and try to repair some of the damage caused by our disruption. As my college advisor Howard Zinn so perfectly put it, “You can’t be neutral on a moving train.”

It’s time to disrupt how we disrupt.

F. Marek Modzelewski (GM of Treeline) & Wojtek Borowicz (Techie, Writer & Editor of Inner Worlds and Does Work Work)

Illustration by Bartosz Lis.